3 Generative AI Frameworks and Tools for Engineers who work with LLMs

8 May, 2024

As Large Language Models (LLMs) continue to revolutionize the field of Artificial Intelligence, engineers and developers are constantly seeking innovative tools and frameworks to harness their full potential. In this ever-evolving landscape, three standout solutions have emerged as game-changers for those working with LLMs: Hugging Face, LangChain, and LlamaIndex.

These cutting-edge frameworks empower engineers to unlock the true power of generative AI, enabling them to build sophisticated applications, streamline workflows, and leverage private data in unprecedented ways. Whether you're a seasoned AI practitioner or just starting your journey, these tools offer a comprehensive suite of capabilities to elevate your LLM-powered projects to new heights.

Hugging Face 🤗

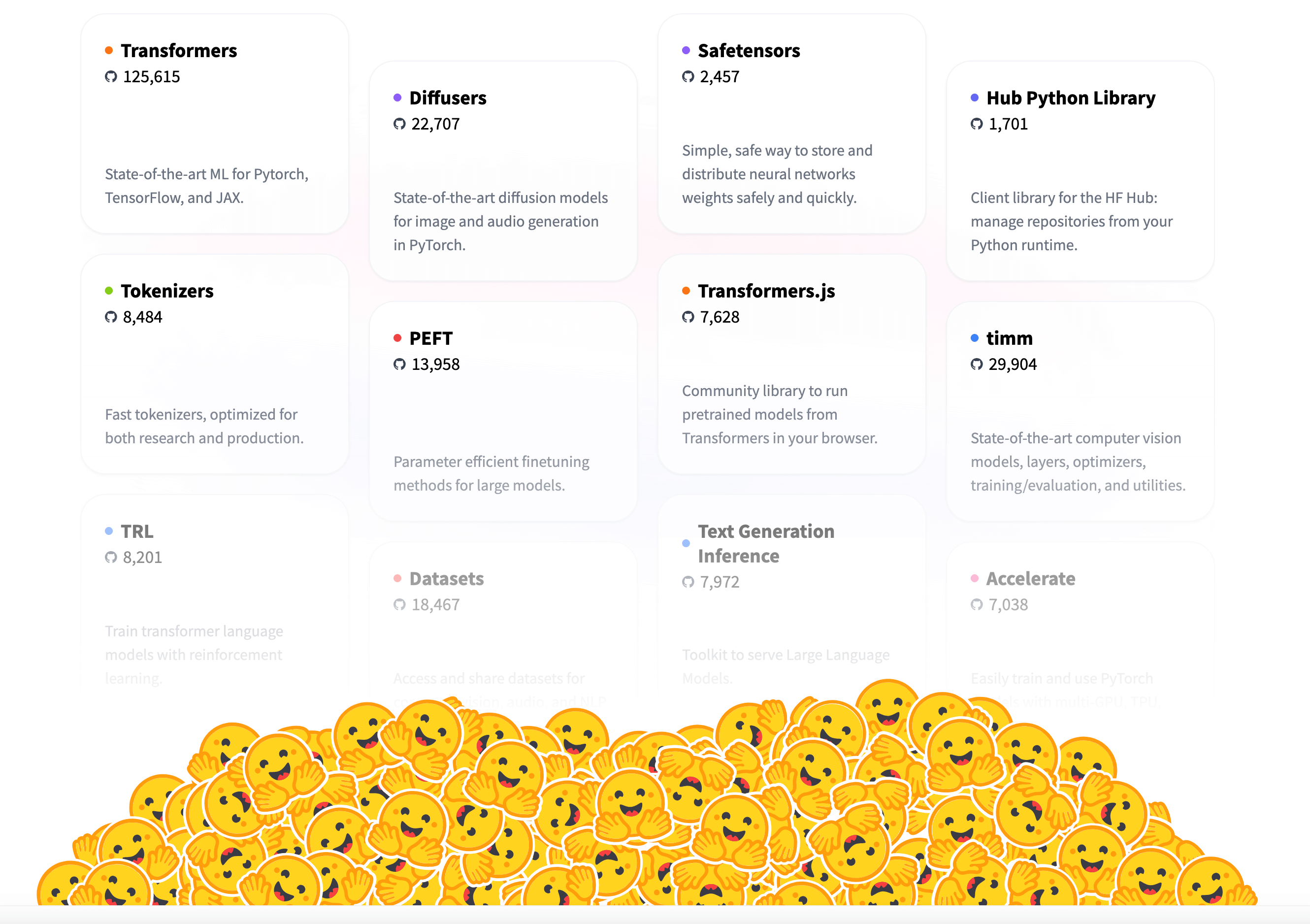

The Hugging Face platform is a central hub for developers and researchers in NLP and AI. 🌟 At its core lies the "Transformers" library, which offers pre-trained models for text classification, question answering, summarization, and beyond.

With Transformers, you get cutting-edge Machine Learning for PyTorch, TensorFlow, and JAX, complete with APIs and tools to effortlessly download and fine-tune state-of-the-art pre-trained models. 👏 Leveraging these pre-trained models can significantly reduce compute costs, carbon footprint, and the resources needed to train from scratch.
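To make the "download and use a pre-trained model" step concrete, here is a minimal sketch built on the `pipeline` helper from Transformers. It assumes `transformers` and a backend such as PyTorch are installed, and that the default sentiment model can be downloaded on first use. The function names `classify_sentiment` and `label_counts` are illustrative, and `sample_output` is a hand-written example of the output shape, not real model scores.

```python
from collections import Counter
from typing import Dict, List


def classify_sentiment(texts: List[str]) -> List[Dict[str, object]]:
    """Run a pre-trained sentiment model over a list of texts."""
    # Imported lazily so the rest of this sketch works without transformers.
    from transformers import pipeline

    # Downloads the library's default sentiment model on first use.
    classifier = pipeline("sentiment-analysis")
    return classifier(texts)


def label_counts(results: List[Dict[str, object]]) -> Dict[str, int]:
    """Count how many inputs received each predicted label."""
    return dict(Counter(str(result["label"]) for result in results))


# Hand-written illustration of the output shape (one dict per input text);
# these are NOT real model scores.
sample_output = [
    {"label": "POSITIVE", "score": 0.99},
    {"label": "NEGATIVE", "score": 0.98},
    {"label": "POSITIVE", "score": 0.70},
]
print(label_counts(sample_output))
```

Calling `classify_sentiment(["I love this library."])` should return one dictionary per input with a `label` and a `score`; the exact values depend on the model that gets downloaded.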

But that's not all! 🔥 Hugging Face is also the go-to destination for the latest diffusion models, thanks to its Diffusers library. Whether you need to generate images, audio, or even 3D structures of molecules, Diffusers has you covered with state-of-the-art pre-trained diffusion models. From simple inference to training your own custom diffusion model, Hugging Face provides the tools you need.

Fine-tuning existing models on specific datasets and tasks is straightforward with Hugging Face. 💪 Tailoring a model to your own data can boost performance and accuracy for targeted applications, and because Transformers works with both TensorFlow and PyTorch, it slots into the training code you already have.

Hugging Face goes beyond just being a model repository. 🌐 It's a comprehensive platform encompassing models, datasets, tools, and a vibrant community. Explore, build, and share AI applications with ease, fostering collaboration and accelerating cutting-edge model development and deployment.

Key Highlights:

- 🔑 Transformers library: Pre-trained models for NLP tasks

- 🖼️ Diffusers library: State-of-the-art diffusion models for generation

- 💻 Seamless integration with popular ML libraries

- 🏆 Fine-tune models for targeted applications

- 🌍 Collaborative platform for AI exploration and sharing

LangChain 🦜

LangChain is an open-source framework that simplifies the creation of applications using large language models (LLMs). 🌐 Its use cases span document analysis, summarization, chatbots, code analysis, and more, mirroring the versatility of LLMs themselves.

Repurpose LLMs with Ease 🔄

With LangChain, organizations can repurpose LLMs for domain-specific applications without retraining or fine-tuning. 💻 Development teams can build complex applications that reference proprietary information, augmenting model responses with contextual data. For instance, you can use LangChain to create applications that read internal documents and summarize them into conversational responses.

LangChain empowers you to implement Retrieval Augmented Generation (RAG) workflows, introducing new information to the LLM during prompting. 🔍 This context-aware approach reduces model hallucination and improves response accuracy.

How LangChain Works ⚙️

Available as Python and JavaScript libraries, LangChain supports a wide range of LLMs, including GPT-3, Hugging Face models, Jurassic-1 Jumbo, and more.

To get started, you'll need a language model – either a publicly available one like GPT-3 or your own trained model. 🧠

Once you have a model, LangChain's tools and APIs make it simple to link it to external data sources, let it interact with its environment, and develop complex applications.

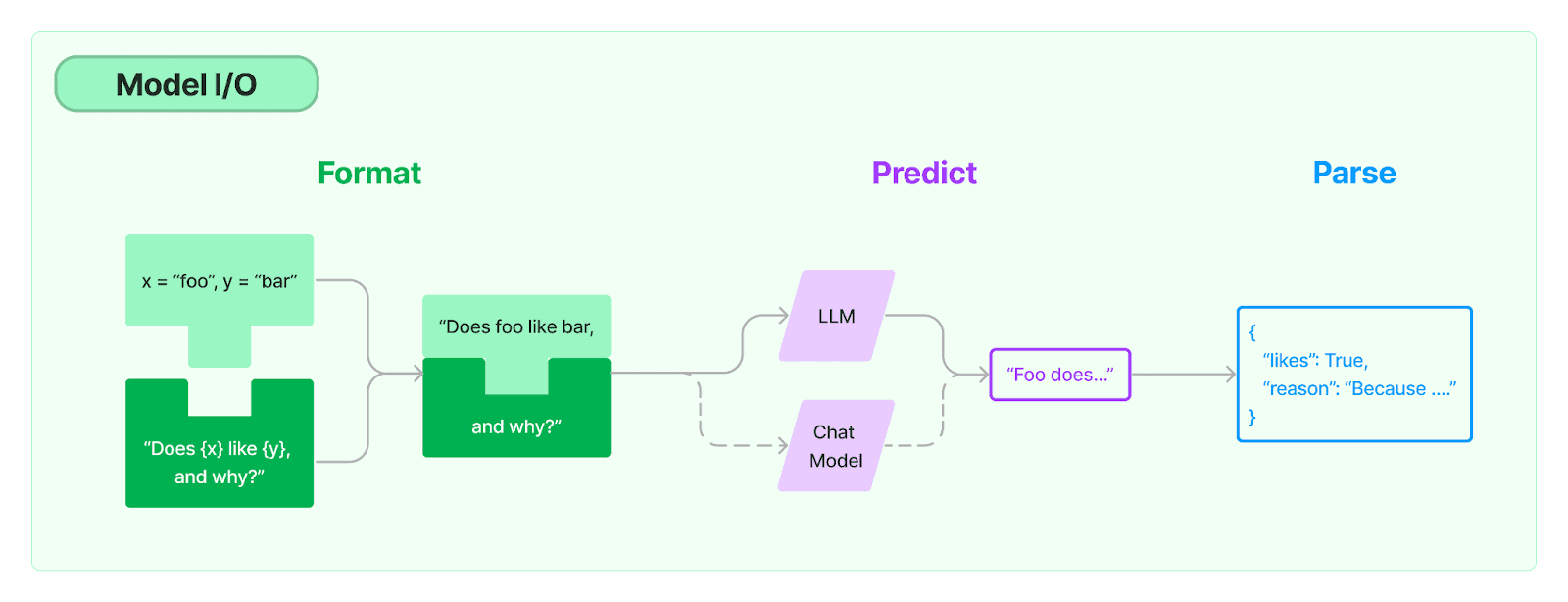

LangChain creates workflows by chaining together a sequence of components called "links." 🔗 Each link performs a specific task, such as:

- 📥 Formatting user input

- 📂 Accessing data sources

- 🧠 Calling a language model

- 📤 Processing the model's output

These links are connected sequentially, with the output of one link serving as the input to the next. 🔄 By chaining together small operations, LangChain enables you to tackle more complex tasks.
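The chaining idea can be sketched in plain Python, without LangChain itself. This is not LangChain's API, only an illustration of the pattern: each link is a function, and the output of one becomes the input of the next. `NOTES` is a hypothetical in-memory data source, and `fake_llm` is a stand-in that echoes its prompt instead of calling a real model.

```python
from functools import reduce
from typing import Callable, List

Link = Callable[[str], str]

# Hypothetical in-memory "data source" used only for this illustration.
NOTES = {"langchain": "LangChain chains components called links."}


def format_input(user_input: str) -> str:
    """Link 1: normalise the raw user input."""
    return user_input.strip().lower()


def add_context(question: str) -> str:
    """Link 2: look up matching notes and build a prompt."""
    facts = [text for key, text in NOTES.items() if key in question]
    context = " ".join(facts) or "no context found"
    return f"Context: {context}\nQuestion: {question}"


def fake_llm(prompt: str) -> str:
    """Link 3: stand-in for a model call; it only echoes the prompt."""
    return f"ANSWER based on -> {prompt}"


def process_output(output: str) -> str:
    """Link 4: flatten the model output onto one line."""
    return output.replace("\n", " | ")


def run_chain(links: List[Link], value: str) -> str:
    """Feed the output of each link into the next one, in order."""
    return reduce(lambda acc, link: link(acc), links, value)


chain = [format_input, add_context, fake_llm, process_output]
result = run_chain(chain, "  What is LangChain?  ")
print(result)
```

Swapping `fake_llm` for a real model call, or `add_context` for a database lookup, changes one link without touching the rest of the chain, which is the main appeal of the design.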

Key Highlights: 🔑

- 🌐 Simplifies LLM application development

- 🔄 Repurpose LLMs without retraining

- 💻 Build context-aware applications

- ⚙️ Supported in Python and JavaScript

- 🔗 Chain components for complex workflows

Unlock the true potential of language models with LangChain – your gateway to AI innovation! 🚀

LlamaIndex 🦙

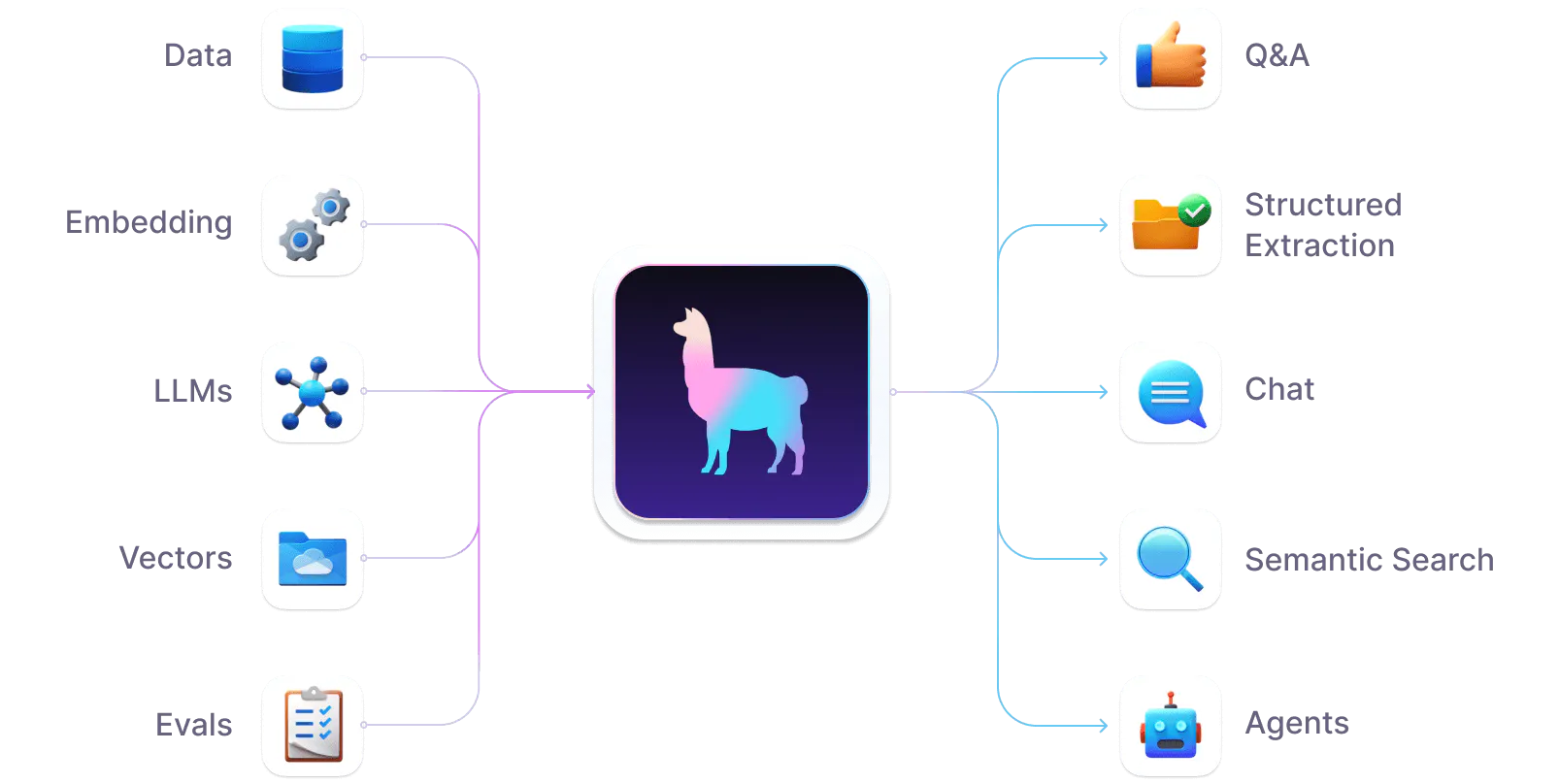

LlamaIndex is a powerful data framework designed to supercharge Large Language Model (LLM) applications with your private data. 📂 While LLMs like GPT-4 come pre-trained on massive public datasets, their utility is limited without access to your proprietary information.

LlamaIndex ingests data from APIs, databases, PDFs, and more via flexible data connectors. 📥 This data is indexed into intermediate representations optimized for LLMs, allowing natural language querying and conversation with your private data through query engines, chat interfaces, and LLM-powered data agents.

LlamaIndex empowers your LLMs to access and interpret private data at scale without retraining the model on new data. 🚀

Simplicity Meets Customization 🔧

Whether you're a beginner seeking a straightforward way to query your data in natural language or an advanced user requiring deep customization, LlamaIndex has you covered. The high-level API lets you get started in just five lines of code, while lower-level APIs offer full control over data ingestion, indexing, retrieval, and more.
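For reference, the high-level path looks roughly like the sketch below, wrapped in a function so that nothing runs on import. It assumes a recent `llama-index` release that exposes `llama_index.core`, a folder of documents on disk, and, with default settings, an OpenAI API key in the `OPENAI_API_KEY` environment variable. The function name `answer_from_folder` is illustrative, not part of the library.

```python
def answer_from_folder(folder: str, question: str) -> str:
    """Index every file in `folder` and answer `question` against it."""
    # Lazy import: requires `pip install llama-index`. With default settings
    # the library calls OpenAI, so OPENAI_API_KEY must be set (assumption).
    from llama_index.core import SimpleDirectoryReader, VectorStoreIndex

    documents = SimpleDirectoryReader(folder).load_data()  # ingest the files
    index = VectorStoreIndex.from_documents(documents)     # build a vector index
    query_engine = index.as_query_engine()                 # natural language interface
    return str(query_engine.query(question))               # response text
```

A call such as `answer_from_folder("data", "What do these documents say about refunds?")` would index the folder and return the generated answer as a string; the folder name and question here are placeholders.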

How LlamaIndex Works ⚙️

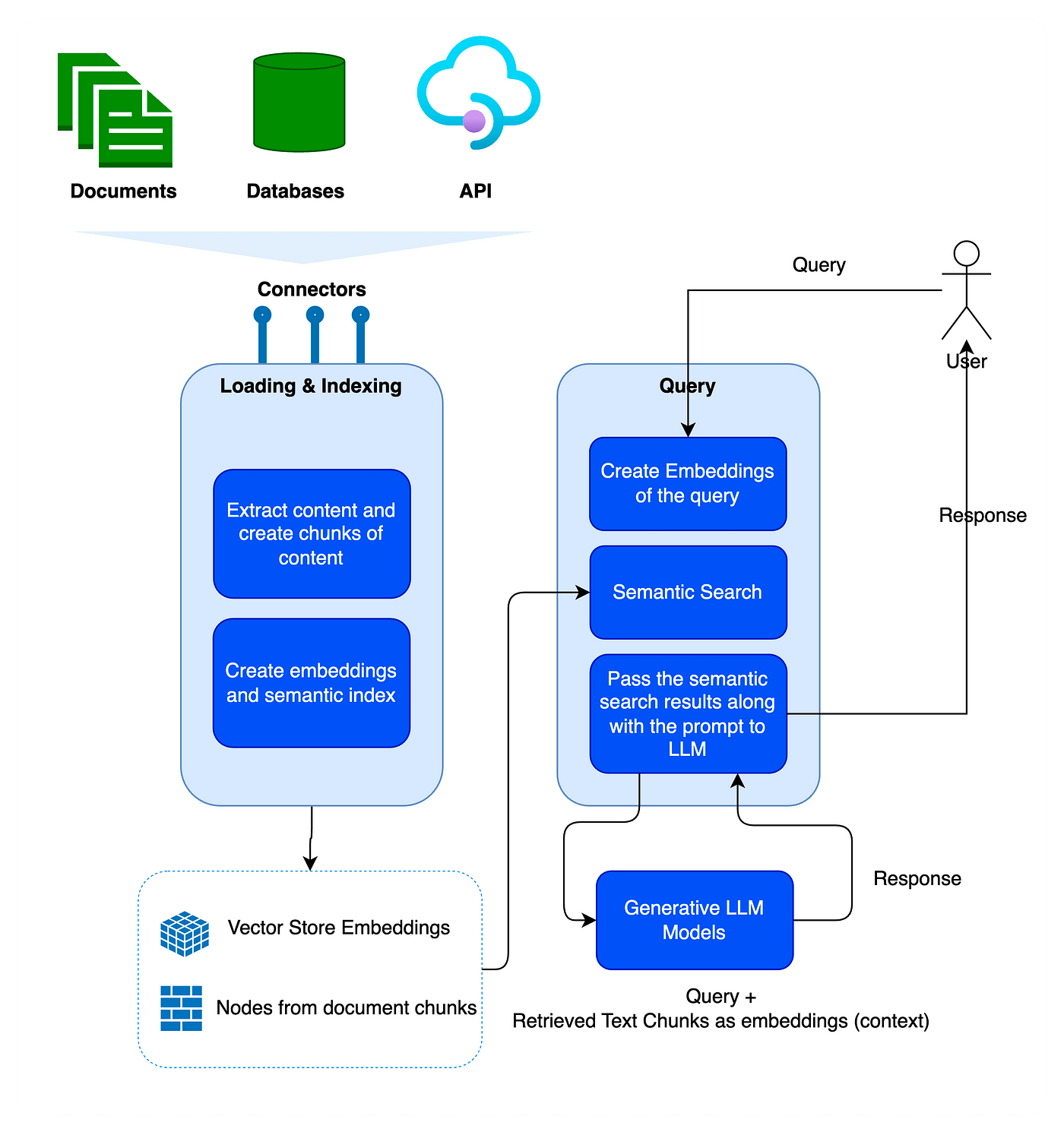

LlamaIndex builds Retrieval Augmented Generation (RAG) systems, which combine an LLM with a private knowledge base. A RAG pipeline has two stages:

Indexing Stage 📑

LlamaIndex efficiently indexes private data into a vector index, creating a searchable knowledge base tailored to your domain. You can input text documents, database records, knowledge graphs, and other data types. Indexing converts the data into numerical vectors or embeddings that capture its semantic meaning, enabling quick similarity searches across the content.
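To show what "converting data into vectors" means, here is a toy sketch of the indexing stage using only the standard library. It is not how LlamaIndex works internally: real pipelines use learned embedding models, while this example substitutes normalised word counts so it can run anywhere. The names `embed` and `build_index` and the sample documents are made up for illustration.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

Vector = Dict[str, float]


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text: str) -> Vector:
    """Toy embedding: word counts scaled to unit length (not a learned model)."""
    counts = Counter(tokenize(text))
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {word: c / norm for word, c in counts.items()}


def build_index(documents: List[str]) -> List[Tuple[str, Vector]]:
    """Pair every document with its vector so it can be searched later."""
    return [(doc, embed(doc)) for doc in documents]


# Made-up sample documents standing in for private data.
documents = [
    "Invoices are due within 30 days.",
    "Support tickets are answered within one business day.",
]
index = build_index(documents)
print(len(index), "documents indexed")
```

Because every vector has unit length, comparing two documents later reduces to a dot product, which is what keeps similarity search fast.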

Querying Stage 🔍

During the querying stage, the RAG pipeline searches for the most relevant information based on the user's query. This information is then provided to the LLM, along with the query, to generate an accurate response. This process lets the LLM draw on current information that may not have been included in its training data, and it takes on the work of retrieving and organizing content from multiple knowledge bases so the model can reason over it.
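The querying stage can be sketched the same way: score each document against the query, keep the best matches, and place them in the prompt. This is a simplified illustration under stated assumptions, not LlamaIndex's implementation. Word-count vectors stand in for real embeddings, and the final call to an LLM is left out. All names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy embedding: raw word counts (a stand-in for a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: List[str], k: int = 1) -> List[str]:
    """Return the k documents most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(documents, key=lambda doc: cosine(query_vec, embed(doc)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: List[str], k: int = 1) -> str:
    """Combine the retrieved context and the query into one prompt."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Made-up sample documents standing in for a private knowledge base.
documents = [
    "Invoices are due within 30 days of issue.",
    "Support tickets are answered within one business day.",
    "The office is closed on public holidays.",
]
query = "When are invoices due?"
prompt = build_prompt(query, documents, k=1)
print(prompt)
```

The resulting prompt is what would be sent to the LLM, so the model answers from the retrieved text rather than from its training data alone.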

Key Highlights: 🔑

- 🔓 Access private data with LLMs

- 📂 Ingest data from various sources

- 🔧 High-level and low-level APIs for flexibility

- ⚙️ Leverages RAG systems for efficient retrieval

- 🔍 Natural language querying and conversation

Unlock the full potential of your LLMs with LlamaIndex – your key to harnessing the power of private data! 🌟

Conclusion:

In the rapidly advancing world of Generative AI, Hugging Face, LangChain, and LlamaIndex stand as beacons of innovation, empowering engineers to push the boundaries of what's possible with Large Language Models. By harnessing the power of these frameworks, developers can unlock new realms of creativity, efficiency, and data integration.

Hugging Face's transformative models and collaborative ecosystem, LangChain's versatile workflow automation, and LlamaIndex's seamless integration of private data with LLMs collectively form a formidable trifecta. Embracing these tools not only streamlines the development process but also opens up a world of possibilities, enabling engineers to create groundbreaking applications that redefine the limits of AI.

As the world of Generative AI continues to evolve, these frameworks will undoubtedly play a pivotal role in shaping the future of AI-driven solutions. Embark on your journey today and experience the transformative potential of these powerful tools, paving the way for a future where AI and human ingenuity converge to create extraordinary outcomes.