3 Proven Strategies to Reduce LLM Costs

2 May, 2024

As the adoption of Large Language Models (LLMs) continues to grow, reducing the associated costs has become a critical priority for many organizations. Fortunately, there are several effective strategies you can leverage to achieve significant cost savings without compromising performance. Let's dive into the top 3 approaches that a bedspoke expert would recommend.

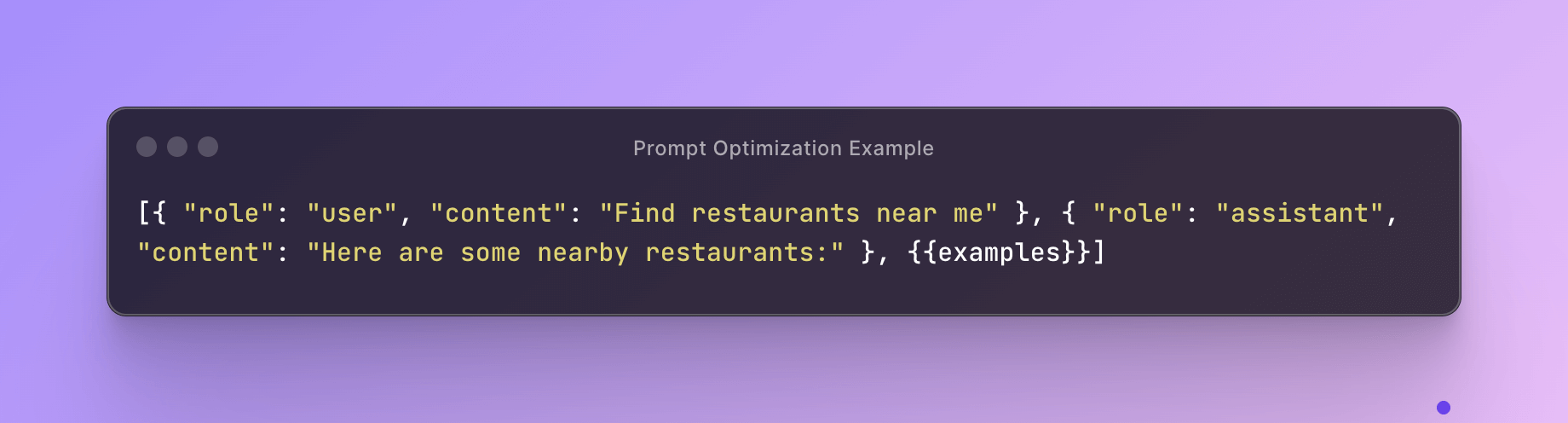

1. Prompt Optimization

The key to reducing LLM costs lies in the prompts you use. By carefully crafting concise and targeted prompts, you can minimize token usage while maintaining accuracy across multiple tasks. For example, if you're building a chatbot to help users find nearby restaurants, instead of using a lengthy prompt with numerous examples, you can create a streamlined one like this:

The {{examples}} section would contain a few carefully selected restaurant names and their addresses. This approach ensures that you only include the most relevant information, reducing token usage and cutting costs by up to 30%1.

2. LLM Approximation

Another effective strategy is to create simpler and more cost-effective LLMs that can match the performance of their more expensive counterparts on specific tasks. By implementing caching mechanisms and fine-tuning models, you can avoid repeated queries to expensive LLMs, achieving similar results while significantly reducing costs by up to 50%.

For instance, in a movie recommendation system, you can use a cache to store the recommendations generated by the LLM. When a user searches for a movie, first check the cache for the recommendation. If it's available, retrieve the result from there, minimizing the need to query the LLM and cutting costs.

3. LLM Cascade

The LLM cascade strategy takes a more adaptive approach, allowing you to dynamically select the optimal set of LLMs to query based on the input. Instead of relying solely on a single LLM, this method enables you to balance performance and cost effectively. Cascade pipeline follows the intuition that simpler questions can be addressed by a weaker but more affordable LLM, whereas only the challenging questions trigger the stronger and more expensive LLM.

Consider a virtual assistant that handles both weather queries and news updates. Rather than using a single LLM for all tasks, you can leverage a cascade approach:

- For weather-related questions, query a weather-specific LLM.

- For news updates, query a different LLM specialized in news.

By dynamically choosing the most appropriate LLM for each task, you can optimize performance and reduce costs by up to 40%.

As a bedspoke expert, I've seen firsthand the transformative impact of these strategies in helping organizations harness the power of LLMs while keeping costs under control. By implementing these approaches, you can unlock significant cost savings without compromising the quality of your applications. Stay ahead of the curve and embrace the future of LLM efficiency.